
What Is Machine Learning?

We do not know exactly which people are likely to buy a particular product, or which author to suggest to people who enjoy reading Hemingway. If we knew, we would not need any analysis of the data; we would just go ahead and write down the code. But because we do not, we can only collect data and hope to extract the answers to these and similar questions from data.

We do believe that there is a process that explains the data we observe. Though we do not know the details of the process underlying the generation of data (for example, consumer behavior), we know that it is not completely random. People do not go to supermarkets and buy things at random. When they buy beer, they buy chips; they buy ice cream in summer and spices for Glühwein in winter. There are certain patterns in the data.

We may not be able to identify the process completely, but we believe we can construct a good and useful approximation. That approximation may not explain everything, but may still be able to account for some part of the data. We believe that though identifying the complete process may not be possible, we can still detect certain patterns or regularities. This is the niche of machine learning.

Application of machine learning methods to large databases is called data mining.

But machine learning is not just a database problem; it is also a part of artificial intelligence. To be intelligent, a system that is in a changing environment should have the ability to learn. If the system can learn and adapt to such changes, the system designer need not foresee and provide solutions for all possible situations.

A model learned from data may be predictive, to make predictions about the future, or descriptive, to gain knowledge from data, or both.

Examples of Machine Learning Applications

Learning Associations:

In the case of retail (for example, a supermarket chain), one application of machine learning is basket analysis, which is finding associations between products bought by customers: If people who buy X typically also buy Y, and if there is a customer who buys X and does not buy Y, he or she is a potential Y customer. Once we find such customers, we can target them for cross-selling.

In finding an association rule, we are interested in learning a conditional probability of the form $P(Y \mid X)$, where $Y$ is the product we would like to condition on $X$, which is the product or the set of products that we know the customer has already purchased.

Let us say, going over our data, we calculate that $P(\text{chips} \mid \text{beer}) = 0.7$. Then, we can define the rule:

70 percent of customers who buy beer also buy chips

We may want to make a distinction among customers and, toward this, estimate $P(Y \mid X, D)$, where $D$ is the set of customer attributes (for example, gender, age, marital status, and so on), assuming that we have access to this information.
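As a rough illustration of estimating such a conditional probability, the sketch below counts co-occurrences in a handful of baskets. The basket contents and the helper names `conditional_probability` and `cross_sell_targets` are made up for this example; real basket analysis would run over a much larger transaction database.

```python
def conditional_probability(baskets, x, y):
    """Estimate P(y | x): the fraction of baskets containing x that also contain y."""
    with_x = [b for b in baskets if x in b]
    if not with_x:
        return None  # x was never bought, so the estimate is undefined
    return sum(1 for b in with_x if y in b) / len(with_x)


def cross_sell_targets(baskets, x, y):
    """Indices of customers who bought x but not y (potential y customers)."""
    return [i for i, b in enumerate(baskets) if x in b and y not in b]


# Hypothetical toy data: each set is one customer's basket.
baskets = [
    {"beer", "chips"},
    {"beer", "chips", "salsa"},
    {"beer", "bread"},
    {"milk", "bread"},
    {"beer", "chips", "milk"},
]

p = conditional_probability(baskets, "beer", "chips")
targets = cross_sell_targets(baskets, "beer", "chips")
print(f"P(chips | beer) = {p:.2f}, cross-sell targets: {targets}")
```

In this toy data, three of the four beer baskets also contain chips, and the one remaining beer customer is the cross-selling target.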

Classification:

In credit scoring (Hand 1998), the bank calculates the risk given the amount of credit and the information about the customer. The information about the customer includes data we have access to and is relevant in calculating his or her financial capacity, namely, income, savings, collaterals, profession, age, past financial history, and so forth. The bank has a record of past loans containing such customer data and whether the loan was paid back or not. From this data of particular applications, the aim is to infer a general rule coding the association between a customer's attributes and his or her risk. That is, the machine learning system fits a model to the past data to be able to calculate the risk for a new application and then decides to accept or refuse it accordingly.

This is an example of a classification problem where there are two classes: low-risk and high-risk customers. The information about a customer makes up the input to the classifier whose task is to assign the input to one of the two classes.

A discriminant function is a function that separates the examples of different classes. Applications of this type are used for prediction.
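To make the idea of a learned discriminant concrete, here is a minimal sketch that fits a single income threshold to past loan records and then uses it to classify new applicants. The one-attribute threshold rule, the data, and the function names are assumptions chosen for illustration; a real credit scoring model would use many attributes and a richer model.

```python
def fit_threshold(incomes, repaid):
    """Pick the income threshold that misclassifies the fewest past loans."""
    best_theta, best_errors = None, len(incomes) + 1
    for theta in sorted(set(incomes)):
        # Predict "repaid" when income >= theta and count disagreements.
        errors = sum((x >= theta) != r for x, r in zip(incomes, repaid))
        if errors < best_errors:
            best_theta, best_errors = theta, errors
    return best_theta


def classify(income, theta):
    """Discriminant: assign an applicant to one of the two classes."""
    return "low-risk" if income >= theta else "high-risk"


# Hypothetical past loans: income in thousands, and whether the loan was repaid.
incomes = [18, 22, 25, 31, 40, 52]
repaid = [False, False, False, True, True, True]

theta = fit_threshold(incomes, repaid)
print(theta, classify(28, theta), classify(45, theta))
```

The threshold plays the role of the model parameter: it is not written down by hand but chosen so that the rule agrees with the recorded outcomes as often as possible.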

There are many applications of machine learning in pattern recognition. One is optical character recognition, which is recognizing character codes from their images.

In medical diagnosis, the inputs are the relevant information we have about the patient and the classes are the illnesses. The inputs contain the patient's age, gender, past medical history, and current symptoms. Some tests may not have been applied to the patient, and thus these inputs would be missing. Tests take time, may be costly, and may inconvenience the patient, so we do not want to apply them unless we believe that they will give us valuable information. In the case of a medical diagnosis, a wrong decision may lead to a wrong or no treatment, and in cases of doubt it is preferable that the classifier reject and defer the decision to a human expert.
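One simple way to sketch the reject option described above is to return a diagnosis only when the classifier's most probable class is confident enough. The probabilities, the 0.9 threshold, and the function name below are hypothetical; how the probabilities are obtained is outside the scope of this sketch.

```python
def classify_with_reject(posteriors, threshold=0.9):
    """Return the most probable class, or 'reject' if it is not probable enough."""
    label, prob = max(posteriors.items(), key=lambda kv: kv[1])
    return label if prob >= threshold else "reject"


# Hypothetical class probabilities for two patients.
confident = classify_with_reject({"flu": 0.95, "cold": 0.05})
doubtful = classify_with_reject({"flu": 0.55, "cold": 0.45})
print(confident, doubtful)
```

A rejected case would be passed on to a human expert instead of being decided automatically.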

Learning also performs compression in that by fitting a rule to the data, we get an explanation that is simpler than the data, requiring less memory to store and less computation to process. Once you have the rules of addition, you do not need to remember the sum of every possible pair of numbers.

Another use of machine learning is outlier detection, which is finding the instances that do not obey the rule and are exceptions. In this case, after learning the rule, we are not interested in the rule but in the exceptions not covered by the rule, which may imply anomalies requiring attention, for example, fraud.
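As a small sketch of this idea, suppose the "rule" is simply that transaction amounts stay close to their average. Instances far from the average, measured in standard deviations, are then flagged as exceptions. The z-score rule, the threshold of 2, and the data are assumptions for illustration, not a method prescribed by the text.

```python
import statistics


def find_outliers(values, z_threshold=2.0):
    """Flag values lying more than z_threshold standard deviations from the mean."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    if std == 0:
        return []  # all values identical: nothing deviates from the rule
    return [v for v in values if abs(v - mean) / std > z_threshold]


# Hypothetical transaction amounts; one of them is far from the rest.
amounts = [20, 22, 19, 21, 23, 20, 500]
outliers = find_outliers(amounts)
print(outliers)
```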

Regression:

Let us say we want to have a system that can predict the price of a used car. Inputs are the car attributes (brand, year, engine capacity, mileage, and other information) that we believe affect a car's worth. The output is the price of the car. Such problems where the output is a number are regression problems.

Both regression and classification are supervised learning problems where there is an input, $X$, an output, $Y$, and the task is to learn the mapping from the input to the output. The approach in machine learning is that we assume a model defined up to a set of parameters: $y = g(x \mid \theta)$

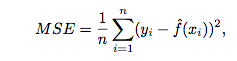

where $g(\cdot)$ is the model and $\theta$ are its parameters. $y$ is a number in regression and is a class code (e.g., 0/1) in the case of classification. $g(\cdot)$ is the regression function or, in classification, the discriminant function separating the instances of different classes. The machine learning program optimizes the parameters, $\theta$, such that the approximation error is minimized, that is, our estimates are as close as possible to the correct values given in the training set.
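To illustrate optimizing parameters so that the approximation error is minimized, the sketch below assumes the simplest possible model, a straight line with parameters `w1` and `w0`, and picks the values that minimize the squared error on a small training set. The linear form, the squared-error criterion, and the mileage/price numbers are assumptions made for this example.

```python
def fit_line(xs, ys):
    """Least-squares fit of y = w1 * x + w0; returns (w1, w0)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    w1 = sxy / sxx
    w0 = mean_y - w1 * mean_x
    return w1, w0


# Hypothetical training set: mileage (thousand km) and price (thousand dollars).
mileage = [20, 40, 60, 80]
price = [18.2, 15.9, 14.1, 11.8]

w1, w0 = fit_line(mileage, price)
predicted = w1 * 50 + w0  # estimate for an unseen car with 50 thousand km
print(f"w1={w1:.3f}, w0={w0:.2f}, predicted price={predicted:.2f}")
```

Once the parameters are fixed, predicting the price of a new car is just a matter of evaluating the fitted function at its mileage.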

One can envisage other applications of regression where one is trying to optimize a function. Let us say we want to build a machine that roasts coffee. The machine has many inputs that affect the quality: various settings of temperatures, times, coffee bean type, and so forth. We run a number of experiments and, for different settings of these inputs, we measure the quality of the coffee, for example, as consumer satisfaction. To find the optimal setting, we fit a regression model linking these inputs to coffee quality and choose new points to sample near the optimum of the current model to look for a better configuration. We sample these points, check quality, add these to the data, and fit a new model. This is generally called response surface design.

Unsupervised Learning:

In supervised learning, the aim is to learn a mapping from the input to an output whose correct values are provided by a supervisor. In unsupervised learning, there is no such supervisor and we only have input data. The aim is to find the regularities in the input. There is a structure to the input space such that certain patterns occur more often than others, and we want to see what generally happens and what does not. In statistics, this is called density estimation.

One method for density estimation is clustering, where the aim is to find clusters or groupings of input. In the case of a company with data on past customers, the customer data contains the demographic information as well as the past transactions with the company, and the company may want to see the distribution of the profile of its customers, to see what type of customers frequently occur. In such a case, a clustering model allocates customers similar in their attributes to the same group, providing the company with natural groupings of its customers. Once such groups are found, the company may decide on strategies, for example, services and products, specific to different groups. Such a grouping also allows identifying those who are outliers, namely, those who are different from other customers, which may imply a niche in the market that can be further exploited by the company.

An interesting application of clustering is in image compression. In this case, the input instances are image pixels represented as RGB values. A clustering program groups pixels with similar colors in the same group, and such groups correspond to the colors occurring frequently in the image. If in an image, there are only shades of a small number of colors and if we code those belonging to the same group with one color, for example, their average, then the image is quantized. Let us say the pixels are 24 bits to represent 16 million colors, but if there are shades of only 64 main colors, for each pixel, we need 6 bits instead of 24. For example, if the scene has various shades of blue in different parts of the image, and if we use the same average blue for all of them, we lose the details in the image but gain space in storage and transmission. Ideally, one would like to identify higher-level regularities by analyzing repeated image patterns, for example, texture, objects, and so forth. This allows a higher-level, simpler, and more useful description of the scene, and for example, achieves better compression than compressing at the pixel level.
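The color quantization described above can be sketched with k-means, one common clustering algorithm (the text does not name a specific one, so its use here is an assumption). Each pixel is replaced by the index of its nearest cluster center, and the number of bits per pixel follows from the number of centers. The pixel values and initial centers are made up for this example.

```python
import math


def nearest(pixel, centers):
    """Index of the center closest to the pixel in RGB space."""
    return min(range(len(centers)), key=lambda i: math.dist(pixel, centers[i]))


def kmeans(pixels, centers, iterations=10):
    """Alternate between assigning pixels to centers and re-averaging each group."""
    for _ in range(iterations):
        groups = [[] for _ in centers]
        for p in pixels:
            groups[nearest(p, centers)].append(p)
        centers = [
            tuple(sum(ch) / len(g) for ch in zip(*g)) if g else c
            for g, c in zip(groups, centers)
        ]
    return centers


def bits_per_pixel(n_colors):
    """Bits needed to store one of n_colors color indices."""
    return (n_colors - 1).bit_length()


# Hypothetical image: three bluish and three reddish pixels as (R, G, B).
pixels = [(10, 20, 200), (12, 22, 210), (8, 18, 190),
          (250, 10, 10), (240, 20, 20), (245, 15, 15)]
centers = kmeans(pixels, [pixels[0], pixels[3]])
codes = [nearest(p, centers) for p in pixels]
print(centers, codes, bits_per_pixel(64))
```

Storing `codes` plus the small table of `centers` instead of the full RGB values is what yields the saving, at the cost of replacing every shade in a group with its average.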

One application area of computer science in molecular biology is alignment, which is matching one sequence to another. This is a difficult string matching problem because strings may be quite long, there are many template strings to match against, and there may be deletions, insertions, and substitutions. Clustering is used in learning motifs, which are sequences of amino acids that occur repeatedly in proteins. Motifs are of interest because they may correspond to structural or functional elements within the sequences they characterize. The analogy is that if the amino acids are letters and proteins are sentences, motifs are like words, namely, a string of letters with a particular meaning occurring frequently in different sentences.
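To give a feel for matching in the presence of deletions, insertions, and substitutions, the sketch below computes the edit (Levenshtein) distance between two strings by dynamic programming. This is a standard textbook measure used here for illustration; practical sequence alignment tools use more elaborate scoring schemes, and the example strings are arbitrary.

```python
def edit_distance(a, b):
    """Minimum number of insertions, deletions, and substitutions turning a into b."""
    # prev[j] holds the distance between the processed prefix of a and b[:j].
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, 1):
        curr = [i]  # turning a[:i] into the empty string takes i deletions
        for j, cb in enumerate(b, 1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # delete ca
                            curr[j - 1] + 1,      # insert cb
                            prev[j - 1] + cost))  # match or substitute
        prev = curr
    return prev[-1]


print(edit_distance("ACGT", "AGT"))
print(edit_distance("kitten", "sitting"))
```

The table has one cell per pair of prefixes, which is why matching long strings against many templates becomes expensive.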

Reinforcement Learning:

In some applications, the output of the system is a sequence of actions. In such a case, a single action is not important; what is important is the policy, that is, the sequence of correct actions to reach the goal. An action is good if it is part of a good policy. In such a case, the machine learning program should be able to assess the goodness of policies and learn from past good action sequences to be able to generate a policy. Such learning methods are called reinforcement learning algorithms.

A good example is game playing where a single move by itself is not that important; it is the sequence of right moves that is good. A move is good if it is part of a good game playing policy.

A robot navigating in an environment in search of a goal location is another application area of reinforcement learning. At any time, the robot can move in one of a number of directions. After a number of trial runs, it should learn the correct sequence of actions to reach the goal state from an initial state, doing this as quickly as possible and without hitting any of the obstacles.
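A very small version of this navigation task can be sketched with tabular Q-learning, one well-known reinforcement learning algorithm (chosen here as an assumption; the text does not prescribe a specific method). The robot lives in a hypothetical one-dimensional corridor, moves left or right, and is rewarded only on reaching the rightmost cell. During the trial runs it explores uniformly at random, and the learned policy is read off from the value table at the end.

```python
import random


def q_learning(n_states=5, episodes=500, alpha=0.5, gamma=0.9, seed=0):
    """Learn a policy for a corridor whose goal is the last cell."""
    rng = random.Random(seed)
    goal = n_states - 1
    actions = (-1, +1)  # move left or move right
    q = {(s, a): 0.0 for s in range(n_states) for a in actions}
    for _ in range(episodes):
        state = 0
        while state != goal:
            action = rng.choice(actions)  # pure random exploration
            nxt = min(max(state + action, 0), goal)  # walls keep the robot inside
            reward = 1.0 if nxt == goal else 0.0
            best_next = 0.0 if nxt == goal else max(q[(nxt, a)] for a in actions)
            # Move the estimate toward reward plus discounted future value.
            q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
            state = nxt
    # Greedy policy: the best-valued action in every non-goal state.
    return [max(actions, key=lambda a: q[(s, a)]) for s in range(goal)]


policy = q_learning()
print(policy)
```

No single move is rewarded except the last one; the discount factor `gamma` is what makes earlier moves toward the goal look better than moves away from it.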

One factor that makes reinforcement learning harder is when the system has unreliable and partial sensory information. For example, a robot equipped with a video camera has incomplete information and thus at any time is in a partially observable state and should decide taking into account this uncertainty. A task may also require a concurrent operation of multiple agents that should interact and cooperate to accomplish a common goal. An example is a team of robots playing soccer.

Notes

Induction is the process of extracting general rules from a set of particular cases.

In statistics, going from particular observations to general descriptions is called inference and learning is called estimation. Classification is called discriminant analysis in statistics.

In engineering, classification is called pattern recognition and the approach is nonparametric and much more empirical.

Machine learning is related to artificial intelligence (Russell and Norvig 1995) because an intelligent system should be able to adapt to changes in its environment.

Data mining is the name coined in the business world for the application of machine learning algorithms to large amounts of data (Weiss and Indurkhya 1998). In computer science, it is also called knowledge discovery in databases (KDD).

Chapter’s Important Keywords:

- Machine Learning.

- Data Mining.

- Descriptive Model.

- Predictive Model.

- Association Rule.

- Classification.

- Discriminant Function.

- Knowledge Extraction.

- Compression.

- Outlier Detection.

- Supervised Learning.

- Response Surface Design.

- Density Estimation.

- Clustering.

- Reinforcement Learning.

- Partially Observable State.

- Induction.

- Inference.

- Estimation.

- Discriminant Analysis.

- Knowledge Discovery in Databases.